Part 1 - AI Lab - Understanding Ollama

Your Personal AI Assistant That Respects Your Privacy

Picture this: a sleek, lightweight application that runs state-of-the-art, openly available AI models right on your own machine rather than on a commercial service's servers. That's Ollama in a nutshell: a bridge between cutting-edge AI research and everyday practical use.

What is Ollama and Why It Matters

Ollama is an open-source project that simplifies running large language models (LLMs) locally. Think of it as a specialized container that handles all the complexity of running these sophisticated neural networks, making them accessible through simple commands. It’s to AI what Docker was to application deployment – a game-changing simplification of a previously complex process.

Unlike cloud-based AI services, Ollama puts you in the driver’s seat. Your data never leaves your machine, your prompts aren’t used to train someone else’s models, and once downloaded, you can run your models without an internet connection.

How Ollama Works

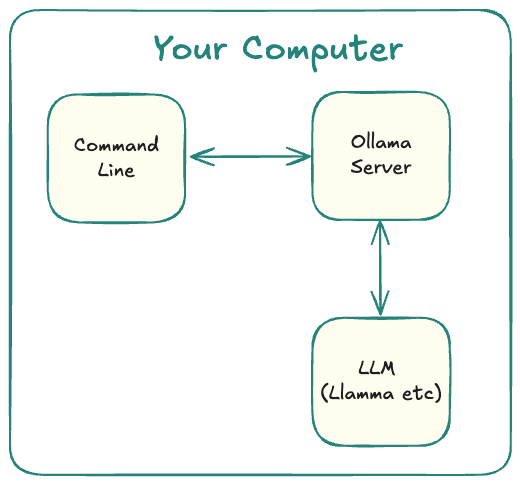

At its core, Ollama functions as an elegant wrapper around complex machine learning infrastructure. Here’s a simplified view of its architecture:

When you interact with Ollama, you’re communicating with a local server that manages the loading of models into memory, handles inference (generating predictions from your input), and provides a consistent interface regardless of which specific model you’re using.
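To make the client-server picture concrete, here is a minimal Python sketch of talking to that local server over HTTP. It assumes a default install with the server listening on `localhost:11434` and uses Ollama's `/api/generate` endpoint with streaming turned off. `llama3.2` is only an example name for a model you have already pulled, and `build_generate_request` and `generate` are hypothetical helper names for illustration.

```python
import json
import urllib.request

# Assumes a default local install listening on port 11434.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,    # any model you have pulled, e.g. "llama3.2"
        "prompt": prompt,
        "stream": False,   # ask for a single JSON object instead of streamed chunks
    }
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]  # the completion text is in the "response" field


# Usage (requires the server to be running and the model to be pulled):
# print(generate("llama3.2", "Explain what a large language model is in one sentence."))
```

Because the interface is the same for every model, switching to a different one only means passing a different model name.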

The Advantages of Going Local

Running models locally with Ollama offers several compelling advantages:

- Complete Privacy: Your conversations, documents, and queries never leave your machine.
- No Subscription Costs: Once you've downloaded a model, you can use it as much as you want, with no per-query or monthly fees (within the terms of the model's license).
- Customization: You can tailor models to specific use cases, for example with your own system prompt, or adjust parameters to trade response speed against output length and quality.
- Offline Operation: No internet? No problem. Your AI assistant works regardless.
- Learning Opportunity: Working directly with these models gives you deeper insight into how they function.
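As a rough illustration of adjusting parameters, the sketch below builds a request body for `/api/generate` that includes an `options` object. The option names (`num_ctx` for the context window size, `num_predict` for the maximum number of tokens to generate, and `temperature`) are real Ollama model parameters, but the `fast` and `detailed` presets, their values, and the `build_payload` helper are hypothetical choices made for this example.

```python
from typing import Optional

# Hypothetical presets. The option names are real Ollama model parameters;
# the values are illustrative, not tuned recommendations.
PRESETS = {
    # Smaller context window and shorter answers: quicker, lighter responses.
    "fast": {"num_ctx": 2048, "num_predict": 128},
    # Larger context window and room for longer answers, at a cost in speed and memory.
    "detailed": {"num_ctx": 8192, "num_predict": 1024},
}


def build_payload(model: str, prompt: str, preset: str = "fast",
                  temperature: Optional[float] = None) -> dict:
    """Build a JSON body for /api/generate with per-request model options."""
    if preset not in PRESETS:
        raise ValueError(f"unknown preset: {preset!r}")
    options = dict(PRESETS[preset])  # copy so the shared preset is never mutated
    if temperature is not None:
        # Lower values make output more focused and repeatable.
        options["temperature"] = temperature
    return {"model": model, "prompt": prompt, "stream": False, "options": options}


# Example: a short, fairly deterministic answer from a hypothetical local model.
payload = build_payload("llama3.2", "Summarize this paragraph.", "fast", temperature=0.1)
```

Keeping the presets as plain dictionaries makes it easy to add your own profiles without touching the request logic.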

Comparison with Other Solutions

While there are other ways to run models locally (like LM Studio, LocalAI, or directly from Python), Ollama stands out for its balance of simplicity and power. It doesn't require Python knowledge, offers a clean API for developers, and focuses on making the experience as frictionless as possible.
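As a small taste of that developer API, the same local server can also report which models are installed. This minimal sketch assumes the default server address and reads the `name` field of each entry returned by the `/api/tags` endpoint; `parse_model_names` and `list_local_models` are hypothetical helper names.

```python
import json
import urllib.request

# Assumes a default local install listening on port 11434.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(raw_json: str) -> list[str]:
    """Extract model names from an /api/tags response body."""
    data = json.loads(raw_json)
    # Each entry in "models" describes one installed model.
    return [entry["name"] for entry in data.get("models", [])]


def list_local_models() -> list[str]:
    """Ask the local Ollama server which models are installed."""
    with urllib.request.urlopen(f"{OLLAMA_URL}/api/tags") as response:
        return parse_model_names(response.read().decode("utf-8"))


# Usage (requires the server to be running):
# print(list_local_models())
```

Separating parsing from the network call keeps the parsing logic easy to check without a running server.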

Compared to cloud services like ChatGPT Plus or Claude, you’re trading some convenience (no automatic updates) and potentially some capability (depending on your hardware) for greater control, privacy, and the elimination of ongoing costs.

The bottom line? Ollama isn’t just a tool – it’s a statement about the future of AI. A future where these powerful technologies aren’t just services we subscribe to, but capabilities we own and control.

Ready to bring this power to your machine? In the next post, we’ll cover the installation process to get you up and running with your own personal AI lab.